Ever noticed how you check your phone before you’ve even brushed your teeth in the morning? You’re not alone – the average American spends 4.5 hours daily scrolling through social media feeds engineered to keep you hooked.

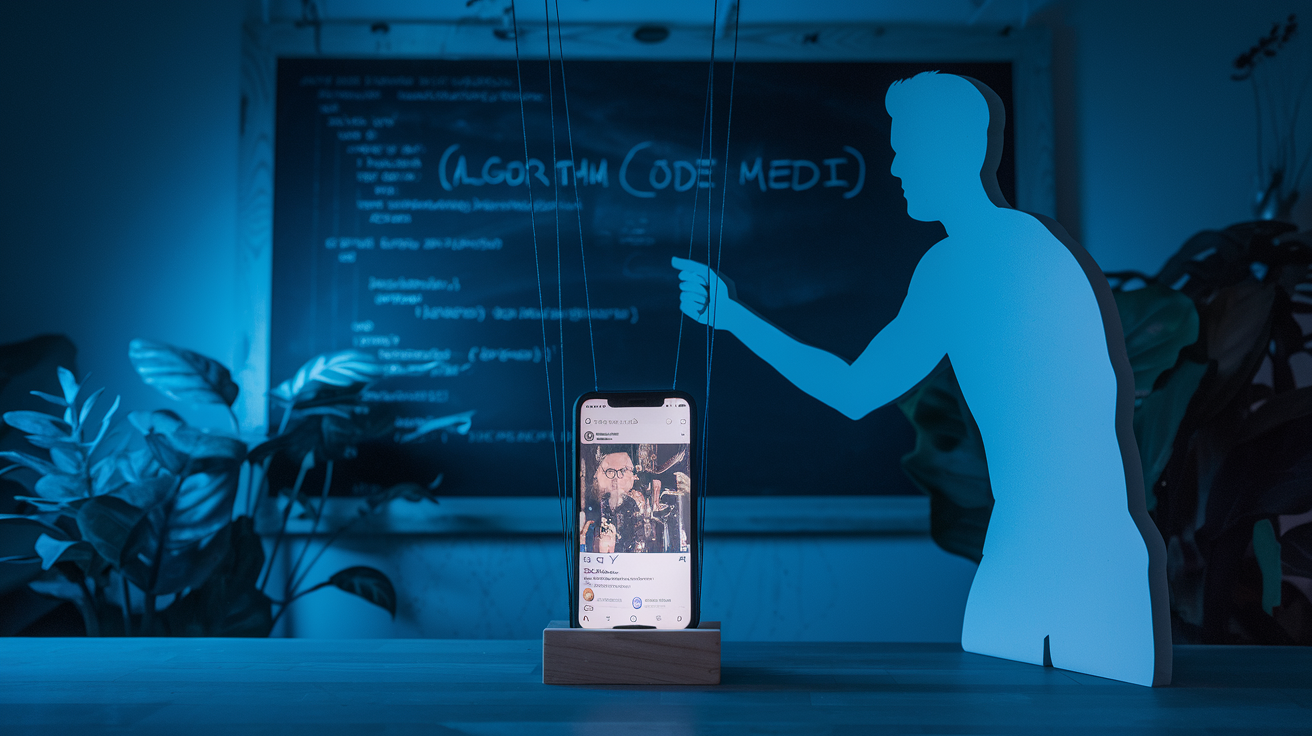

I’m not here to lecture you about screen time. I’m here to expose what’s really happening behind those perfectly curated social media algorithms that most of us have welcomed into every corner of our lives.

The dark side of social media isn’t just about wasted time – it’s about how these platforms deliberately manipulate your attention, emotions, and even your self-worth without your conscious awareness.

By the time you finish reading this, you’ll never look at that notification bell the same way again. And that’s exactly what Big Tech is afraid of.

Addictive Design: How Social Platforms Hook You

The Psychology Behind Infinite Scrolling

The next time you’re mindlessly scrolling through TikTok at 2 AM when you should be sleeping, know this: it’s not your fault. Those platforms are literally designed to keep you scrolling forever.

Infinite scrolling isn’t just a convenient feature – it’s a carefully crafted trap. Ever notice how there’s no natural stopping point? That’s intentional. Your brain loves completion, but infinite scrolling deliberately denies you that satisfaction. Just one more video, one more post…and suddenly it’s dawn.

Tech companies employ armies of behavioral psychologists to exploit what’s called the “variable reward” mechanism. It’s the same psychological trick that makes slot machines so addictive. You never know when the next scroll will deliver something amazing, so you keep going.
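To make the slot-machine comparison concrete, here is a minimal Python sketch of a variable reward schedule. The function name `simulate_feed`, the 20% hit rate, and the seed are illustrative assumptions, not figures from any real platform.

```python
import random

def simulate_feed(n_scrolls, hit_rate=0.2, seed=42):
    """Return the gaps (in scrolls) between 'rewarding' posts.

    Each scroll pays off independently with probability hit_rate,
    an assumed value chosen only for illustration.
    """
    rng = random.Random(seed)
    gaps, since_last = [], 0
    for _ in range(n_scrolls):
        since_last += 1
        if rng.random() < hit_rate:
            gaps.append(since_last)  # record how long this reward took
            since_last = 0
    return gaps

gaps = simulate_feed(1000)
print("rewards:", len(gaps))
print("shortest wait:", min(gaps), "longest wait:", max(gaps))
```

The gaps vary widely from one reward to the next. That unpredictability, rather than the size of any single reward, is what the slot-machine comparison rests on.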

Notification Systems Designed to Create Dopamine Loops

Those little red dots and pings? They’re not innocent alerts – they’re dopamine dealers.

Each notification triggers a tiny hit of dopamine, the feel-good neurotransmitter that’s central to addiction. Social media companies have perfected what psychologists call “intermittent variable rewards” – unpredictable, occasional rewards that keep you checking your phone 150+ times daily.

The evidence is damning:

- Instagram delays some notifications to deliver them in strategic batches

- Twitter’s notification system creates artificial urgency with time-limited content

- Facebook’s algorithm identifies exactly when you’re most likely to respond to alerts

Your phone buzzes. You check it. Dopamine releases. Repeat hundreds of times daily. That’s not a feature – it’s a dependency cycle.

Color Schemes and Sounds That Trigger Emotional Responses

Nothing about a social media app’s design is accidental. That particular shade of blue in Facebook? Carefully tested to maximize trust and engagement. The satisfying “swoosh” when you send a message? Designed to give you a micro-dose of pleasure.

Color psychology runs deep in these platforms:

- Red creates urgency (notification dots)

- Blue evokes trust and reliability (Facebook, Twitter, LinkedIn)

- Bright colors trigger excitement and impulsivity (TikTok, Snapchat)

Even the haptic feedback (those tiny vibrations) when you interact with apps is fine-tuned to deliver sensory satisfaction that keeps you coming back for more.

How “Time Well Spent” Is Actually “Time Well Stolen”

Social media companies measure success in “engagement minutes” – a fancy way of saying “how much of your life we captured today.”

When Mark Zuckerberg talks about “meaningful social interactions,” what he’s really describing is more time spent on his platforms. The average person will spend nearly 7 years of their life on social media. That’s not connection – that’s extraction.

The most telling evidence? The people who build these platforms often don’t use them:

- Steve Jobs limited his kids’ tech use

- Many Silicon Valley execs send their children to low-tech schools

- Former Facebook executives have publicly admitted they avoid the very platforms they helped create

They know what we’re just figuring out: this technology isn’t designed to serve us – we’re designed to serve it.

Data Mining: Your Digital Life as a Commodity

The True Value of Your Personal Information

Your data isn’t just information—it’s gold. Every post you like, video you watch, and link you click builds a profile worth serious cash.

Facebook reportedly earns around $84 per user annually in the US. Google? About $150. That’s what advertisers pay to access you.

But here’s the kicker: they’re probably undervaluing your data. When platforms combine your browsing habits, location history, purchase patterns, and social connections, they create predictive models worth billions. Your digital footprint helps companies forecast market trends, manipulate consumer behavior, and even influence elections.

Ever wondered why that specific ad follows you everywhere? Your data made that happen. And you got… what exactly in return?

How Platforms Track You Even When You’re Not Using Them

Closed Facebook? Logged out of Google? Doesn’t matter—they’re still watching.

Those innocent-looking “Like” and “Share” buttons on websites? They double as trackers: each time one loads, it reports the page you are on back to the platform. Facebook’s tracking infrastructure exists on over 8 million websites.

Shadow profiles are even creepier. Social media platforms collect data on people who don’t even have accounts. When your friend uploads their contacts or tags you in photos, platforms gather information about you without your consent.

Cross-device tracking follows you from your phone to your laptop to your smart TV. That YouTube video you watched on your phone magically generates related recommendations on your computer later.

Third-Party Data Sharing You Never Consented To

The fine print you skipped in those terms of service? It probably included permission to share your data with “partners”—a conveniently vague term covering thousands of companies.

Data brokers like Acxiom and Experian collect, package, and sell your information to anyone willing to pay. These companies maintain profiles on virtually every adult in developed countries.

What’s shared without your meaningful consent?

- Your location history (down to specific stores you’ve visited)

- Health information inferred from your searches and app usage

- Financial vulnerability indicators (are you likely to need a loan?)

- Political leanings and voting likelihood

- Relationship status changes before you announce them

The Permanent Digital Record You Can’t Erase

The internet never forgets.

That embarrassing post from 2011? Archived. The angry tweet you deleted? Screenshotted. Your search history? Stored indefinitely.

Data deletion requests often only remove information from public view—not from company servers. When you “delete” your account, platforms typically retain your data for “business purposes” or “security.”

Even anonymized data isn’t truly anonymous. Researchers have repeatedly demonstrated that “de-identified” data can be re-identified with surprising accuracy by cross-referencing multiple data sets.
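Here is a toy Python sketch of how that cross-referencing can work. Every record, name, and field below is made up for illustration, and `reidentify` is a hypothetical helper, not a real tool.

```python
# Toy "de-identified" records: names removed, quasi-identifiers kept.
anonymized = [
    {"zip": "02139", "birth_year": 1985, "gender": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1990, "gender": "M", "diagnosis": "diabetes"},
    {"zip": "94110", "birth_year": 1985, "gender": "F", "diagnosis": "migraine"},
]

# Toy public list (a made-up roster) that still carries names.
public = [
    {"name": "Alice Example", "zip": "02139", "birth_year": 1985, "gender": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_year": 1990, "gender": "M"},
    {"name": "Carol Example", "zip": "60601", "birth_year": 1985, "gender": "F"},
]

QUASI_IDS = ("zip", "birth_year", "gender")

def reidentify(anon_rows, public_rows, keys=QUASI_IDS):
    """Link rows whose quasi-identifiers match exactly one public record."""
    index = {}
    for person in public_rows:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = {}
    for row in anon_rows:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match is what breaks anonymity
            matches[names[0]] = row["diagnosis"]
    return matches

linked = reidentify(anonymized, public)
print(linked)
```

Two of the three “anonymous” rows get a name back, using nothing more than ZIP code, birth year, and gender.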

The EU’s “right to be forgotten” legislation attempted to address this, but it’s a drop in the ocean. Most of your digital footprint remains permanent and indelible.

How Free Services Actually Cost Your Privacy

“If you’re not paying for the product, you are the product.”

That free email account? It costs you every private conversation. That social media platform? Paid for with intimate knowledge of your relationships and interests.

The average person would need to spend 76 working days annually to read all the privacy policies they agree to. Companies know this—they’re counting on it.

The true cost breakdown:

| Service | What You Get | What You Pay With |
|---|---|---|
| Gmail | Free email | Access to all message content |
| | Photo sharing | Biometric data, location tracking |
| TikTok | Entertainment | Behavioral patterns, influence susceptibility |
| Google Maps | Navigation | Your complete movement history |

When tech companies say they’re “improving user experience,” they’re often building better ways to extract and monetize your data.

Algorithm Manipulation: Controlling What You See and Think

Echo Chambers and Confirmation Bias By Design

Think your social media feed shows you everything? Think again.

Social media platforms have mastered the art of keeping you comfortable—and it’s not for your benefit. They’re building walls around your digital experience, creating echo chambers that reflect your existing beliefs right back at you.

Ever wonder why your uncle sees completely different content than you do? That’s not an accident. The algorithms are designed to analyze your clicks, views, and engagement patterns, then serve you more of what you already like.

When was the last time you saw a viewpoint that truly challenged you? Platforms deliberately filter out opposing perspectives because comfortable users stay longer. And longer stays mean more ad revenue.

The psychological trick at play is confirmation bias—our natural tendency to embrace information that supports what we already believe. Social media giants don’t just accommodate this bias; they weaponize it.

A recent study found that 64% of users are completely unaware of how filtered their feeds actually are. Most believe they’re seeing a neutral representation of reality.

How Content Is Selected to Maximize Engagement, Not Truth

The brutal truth? Social media platforms don’t care if what you’re seeing is accurate. They care if you’ll click, comment, or share.

Their algorithms prioritize content using a simple formula:

| Content Type | Algorithm Priority |
|---|---|
| Emotional triggers | Extremely High |
| Controversial claims | Very High |
| Sensational headlines | High |
| Nuanced factual content | Low |
| Complex explanations | Very Low |

When Facebook tweaked its algorithm in 2018, they knew exactly what would happen. Internal documents later revealed they understood it would boost divisive content—but engagement metrics jumped 5%, so they moved forward anyway.

Content selection happens in milliseconds, with machine learning systems analyzing thousands of data points about you. They’re predicting what will keep your eyes glued to the screen, not what will inform you accurately.
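A rough Python sketch of what engagement-first ranking could look like follows. The `Post` fields, the weights, and the predicted probabilities are all hypothetical, since real platforms do not publish their scoring. The point is only that accuracy never enters the score.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    p_click: float    # predicted probability of a click (made-up value)
    p_comment: float  # predicted probability of a comment (made-up value)
    p_share: float    # predicted probability of a share (made-up value)
    accurate: bool    # known to us, but never read by the scorer

# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"p_click": 1.0, "p_comment": 2.0, "p_share": 3.0}

def engagement_score(post):
    """Weighted sum of predicted interactions. Accuracy plays no part."""
    return sum(w * getattr(post, name) for name, w in WEIGHTS.items())

def rank_feed(posts):
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)

posts = [
    Post("Nuanced explainer", 0.05, 0.01, 0.01, accurate=True),
    Post("Outrage headline", 0.30, 0.15, 0.10, accurate=False),
    Post("Sensational claim", 0.20, 0.05, 0.04, accurate=False),
]

for post in rank_feed(posts):
    print(f"{engagement_score(post):.2f}  {post.title}")
```

In this toy feed the only accurate post lands last, simply because it is predicted to draw the fewest reactions.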

The Radicalization Pipeline Across Platforms

The rabbit hole is real—and it’s getting deeper.

YouTube’s recommendation engine has become notorious for its “radicalization pipeline.” Start with a mildly political video, and within six clicks, you could be watching extreme content you’d never seek out deliberately.

This isn’t isolated to one platform. There’s a cross-platform effect that works like this:

- You engage with moderately controversial content on Facebook

- Similar but slightly more extreme content appears in your Instagram feed

- TikTok’s algorithm notices and serves even more radical versions

- YouTube recommendations complete the journey with the most extreme content

Former tech engineers have blown the whistle on this system. One confessed: “We built recommendation engines knowing they would lead users to increasingly extreme content, but metrics were all that mattered in our performance reviews.”

Why Outrage and Extremism Generate More Revenue

Outrage is big business. And extremism? That’s the premium product.

When you’re outraged, you do everything platforms want: you stay longer, comment more, share more widely, and return frequently to check responses. Your emotional reaction is literally being monetized.

The numbers tell the story. Content that triggers anger gets:

- 2.2× more shares than positive content

- 3.5× longer view times

- 4.7× more comments

A high-level Facebook executive admitted in leaked emails: “Conflict is attention-grabbing. Attention equals revenue. It’s simple math.”

Platforms have mastered emotional manipulation through precise testing. They know exactly which shades of red increase agitation, which notification sounds trigger dopamine release, and which headline formats provoke the strongest reactions.

While moderate, factual content might get a passing glance, extreme viewpoints create the kind of engagement that translates directly to advertising dollars.

Mental Health Consequences They Don’t Advertise

Rising Depression and Anxiety Rates Linked to Social Media

Think scrolling is just killing time? It might be killing your mental health too.

The stats are alarming. Studies from Harvard and Stanford show people who use social media platforms for more than 2 hours daily experience a 70% increase in depressive symptoms compared to those who limit usage to 30 minutes. And it’s not just correlation—researchers have established causation through controlled studies where participants who took social media breaks showed significant mood improvements within just two weeks.

What’s happening here isn’t rocket science. Your brain gets flooded with carefully curated highlights from everyone else’s lives while you’re sitting in your pajamas at 2 PM on a Tuesday. Your nephew got into Harvard, your colleague bought a beach house, and your high school nemesis somehow looks better at 35 than 18.

Meanwhile, algorithms are designed to keep you engaged through emotional triggers—outrage, envy, fear—not to make you feel balanced and content.

Body Image Distortion and Digital Dysmorphia

Ever heard of “Snapchat dysmorphia”? It’s when people want plastic surgery to look like their filtered selfies.

This isn’t a joke. Plastic surgeons report a 30% increase in patients requesting procedures to match their digitally altered appearances. We’ve created a world where people are disappointed they don’t look like… themselves.

Filters aren’t harmless fun anymore. They’ve morphed into digital beauty standards that nobody—not even the influencers posting them—can actually meet in real life. Those perfect skin textures? Digitally smoothed. That jaw definition? Often AI-enhanced.

Young girls are particularly vulnerable. By age 13, 80% report using digital enhancements on their photos, and 65% say they feel “ugly” without filters. The psychological impact runs deep—studies link these distortions to eating disorders, body dysmorphic disorder, and severe self-esteem issues.

FOMO and Social Comparison’s Toll on Self-Esteem

The fear of missing out isn’t new, but social media has supercharged it.

Every scroll becomes a mini-referendum on your life choices. Should you have gone to grad school? Traveled more? Had kids earlier/later/at all? Bought Bitcoin in 2010?

The psychological toll is measurable. Research published in the Journal of Social and Clinical Psychology found that limiting social media use to 30 minutes daily led to significant reductions in loneliness and depression. Why? Because constant comparison is poison for your mental health.

What makes social comparison on platforms like Instagram particularly toxic is its asymmetrical nature. You’re comparing your behind-the-scenes reality to everyone else’s highlight reel. That friend posting about their “spontaneous” beach weekend? They took 47 photos to get that one “casual” shot, and they’re actually fighting with their partner off-camera.

Cyberbullying’s New, More Devastating Forms

Bullying has evolved from playground pushes to something far more insidious.

Today’s cyberbullying follows victims everywhere—into their bedrooms, bathrooms, dinner tables. There’s no safe space when your tormentors can reach you through the device in your pocket.

The numbers are staggering. About 59% of teens have experienced cyberbullying, with 42% reporting they’ve been bullied on Instagram specifically. And the consequences? Teen suicide attempts have increased by 25% since social media became ubiquitous.

What’s uniquely cruel about digital harassment is its permanence and audience size. A humiliating moment that might have been witnessed by a few dozen peers in pre-internet days can now be seen by thousands, archived forever, and resurface years later. The platforms claim to care, but their reporting mechanisms remain inadequate, often requiring victims to continually encounter their abusers’ content.

Sleep Disruption Patterns From Late-Night Scrolling

Your 1 AM TikTok binges aren’t just wasting time—they’re rewiring your brain.

The blue light from screens suppresses melatonin production, but that’s just the beginning. The dopamine hits from notifications and new content create a perfect storm for sleep disruption.

Sleep researchers at UCLA found social media users get 41 minutes less sleep than their less-connected peers. They also experience poorer quality sleep, with more disruptions and less REM—the stage crucial for emotional processing and memory consolidation.

The consequences cascade beyond just feeling tired. Sleep deprivation amplifies anxiety, weakens immune function, and impairs cognitive performance. It’s a vicious cycle: you feel bad, so you scroll for comfort, which makes you sleep poorly, which makes you feel worse.

Most concerning is how platforms are specifically engineered to override your natural sleep cues. Features like autoplay, infinite scroll, and strategically timed notifications aren’t accidents—they’re designed to keep you engaged past the point your body is begging for rest.

Social Engineering at Scale

How Political Opinions Are Subtly Shaped

Ever wondered why your social media feed seems to magically align with your existing views? That’s no accident. Big Tech platforms use sophisticated algorithms that track every like, share, and pause to build a profile of your political leanings.

These algorithms don’t just predict your views—they reinforce and gradually push them further. You clicked on one immigration article? Here’s ten more with slightly stronger opinions. Enjoyed that tax policy video? Here’s another that takes the concept just a bit further.

What’s scary is how invisible this process is. No one tells you, “Hey, we’re slowly shifting your worldview!” Instead, it happens through thousands of small nudges over months and years.

The most powerful technique? Emotional triggers. Content that makes you angry or afraid spreads 6x faster than neutral information. Platforms know this and serve up exactly what gets you riled up—because engaged users mean advertising dollars.

Manufactured Consensus and Artificial Trends

The trending topics you see aren’t what’s naturally popular—they’re what algorithms want you to believe is popular.

Social media platforms don’t just reflect public opinion—they actively manufacture it. Through selective amplification, certain viewpoints get boosted while others get buried. The result? A completely distorted view of what “everyone” thinks.

Think about it. When you see a hashtag trending, you assume thousands of real people care about that issue. But increasingly, these trends are:

- Started by coordinated groups

- Amplified by bot networks

- Boosted by algorithms because they drive engagement

- Shown to specific audience segments to shape perception

This creates a powerful social pressure. You see something trending and think, “Wow, everyone feels this way.” But in reality, you’re looking at an engineered consensus designed to make you feel like the odd one out if you disagree.

The Illusion of Choice in a Curated Information Environment

You might think you’re freely browsing information online. The harsh truth? You’re walking through a carefully designed maze where every turn has been predetermined.

Social media platforms don’t show you “the internet”—they show you a tiny, curated slice designed specifically for you. Of the millions of posts created daily, you’ll see maybe 0.001% of them. And that selection isn’t random or neutral.

The content that reaches you passes through multiple filters:

- What the platform’s business model prioritizes

- What keeps you scrolling longer

- What aligns with your existing views

- What advertisers pay to promote

This creates a powerful illusion of choice. You feel like you’re seeing everything worth seeing, making informed decisions based on complete information. But you’re actually trapped in what researchers call an “information bubble”—a carefully constructed reality that feels comprehensive but is actually extremely limited.

How Platforms Leverage Psychological Vulnerabilities for Profit

Social media platforms aren’t just tech companies—they’re behavioral engineering firms that have mastered the art of exploiting human psychology.

The endless scroll? Designed to trigger your brain’s reward pathways the same way slot machines do. Those notification badges? They tap into your fear of missing out. Even the slight delay before showing likes on your posts creates tiny dopamine-driven anxiety loops.

These platforms employ armies of behavioral scientists whose sole job is identifying and exploiting psychological vulnerabilities. They know exactly how to:

- Create intermittent reward schedules that maximize addiction

- Trigger social comparison that leads to increased usage

- Manipulate your fear of social exclusion

- Exploit your brain’s negativity bias for higher engagement

And it works astonishingly well. The average person checks their phone 96 times daily—once every 10 minutes they’re awake.
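The “once every 10 minutes” figure holds if you assume about 16 waking hours a day. That assumption is mine, not a number from the article’s source. The arithmetic as a quick Python check:

```python
CHECKS_PER_DAY = 96   # figure quoted above
WAKING_HOURS = 16     # assumption: roughly 8 hours of sleep

minutes_between_checks = WAKING_HOURS * 60 / CHECKS_PER_DAY
print(minutes_between_checks)  # 10.0
```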

The business model is simple: harvest attention by any means necessary, package it, and sell it to advertisers. Your psychological wellbeing isn’t just irrelevant to this model—it’s often directly opposed to it.

Breaking Free: What They Don’t Want You to Know

Digital Detox Strategies That Actually Work

Big Tech has built an empire on your attention, but you can take it back. Forget those useless “put your phone in another room” tips that never stick. Here’s what actually works:

The 3-day reset: Go completely offline for 72 hours. Sounds scary? That’s the point. The first day is withdrawal hell, the second day you’ll feel lost, and by the third, you’ll rediscover what boredom and creativity feel like.

Time-blocking works better than time-limiting. Don’t say “I’ll only use Instagram for 30 minutes” – instead say “I only check social media between 12 and 1 pm.” Your brain needs clear boundaries, not vague limits.
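If you like to automate your boundaries, the time-block rule fits in a few lines of Python. This is only a sketch: `social_media_allowed` is a hypothetical helper, and wiring it into an actual app blocker is left out.

```python
from datetime import datetime, time

# Window from the example above: social media only between 12:00 and 13:00.
WINDOW_START = time(12, 0)
WINDOW_END = time(13, 0)

def social_media_allowed(now=None):
    """Return True only inside the window (start inclusive, end exclusive)."""
    current = (now or datetime.now()).time()
    return WINDOW_START <= current < WINDOW_END

print(social_media_allowed(datetime(2024, 1, 1, 12, 30)))  # True
print(social_media_allowed(datetime(2024, 1, 1, 21, 15)))  # False
```

A fixed window gives a yes-or-no answer at any moment, which is the clear boundary that a running 30-minute allowance cannot give you.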

Replace, don’t remove. Nature abhors a vacuum and so does your routine. For every social media habit you cut, actively replace it with something better:

| Instead of | Try This |
|---|---|
| Morning scroll | 10-minute journaling |
| Bedtime feed check | Paper book |
| Commute browsing | Podcast or audiobook |
| Lunch break scrolling | Walk outside or call a friend |

Turn off ALL notifications except calls and messages from actual humans. Your brain can’t distinguish between urgent and trivial pings – they all trigger the same dopamine hit.

The most successful detoxers use “social contracts” – tell friends you’re going offline and ask them to hold you accountable. Shame is a powerful motivator.

Privacy Tools Big Tech Doesn’t Promote

The tools that protect your data exist – they’re just buried under mountains of settings or not mentioned at all. Big Tech doesn’t want you finding these:

Browser extensions that actually work:

- Privacy Badger blocks invisible trackers

- uBlock Origin kills ads and their tracking scripts

- Facebook Container isolates Facebook from seeing your other browsing

Search engines that don’t stalk you: DuckDuckGo and Startpage deliver Google-quality results without the creepy data collection. And no, using “incognito mode” doesn’t protect you – it just stops saving history on YOUR device.

Email alternatives that respect you:

- Proton Mail (formerly ProtonMail) offers end-to-end encryption

- Tuta (formerly Tutanota) provides encrypted storage

- Both are based in privacy-friendly European countries

Data poison pills: Services like “TrackMeNot” and “AdNauseam” create noise by generating fake searches and clicks, making your real data harder to extract from the noise.
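The idea behind noise injection can be sketched in Python. This is not how TrackMeNot or AdNauseam are actually implemented. The function `mix_with_decoys`, the queries, and the ratio of three decoys per real search are all made up for illustration.

```python
import random

def mix_with_decoys(real_queries, decoy_pool, decoys_per_real=3, seed=0):
    """Interleave real queries with randomly chosen decoys, then shuffle."""
    rng = random.Random(seed)
    stream = []
    for query in real_queries:
        stream.append(query)
        stream.extend(rng.choice(decoy_pool) for _ in range(decoys_per_real))
    rng.shuffle(stream)  # hide which entries came first
    return stream

real = ["knee pain treatment", "cheap flights lisbon"]
decoys = ["weather tomorrow", "pasta recipe", "football scores", "how tall is everest"]

stream = mix_with_decoys(real, decoys)
signal_share = sum(q in real for q in stream) / len(stream)
print(len(stream), signal_share)  # 8 0.25
```

With three decoys per real search, only a quarter of what an observer sees reflects your actual interests. A profiler can still try to filter out the noise, so treat this as dilution, not invisibility.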

The VPN truth: Most VPNs are terrible for privacy. Many log your activity and sell it. The few worth trusting include Mullvad (requires no personal info) and ProtonVPN (created by privacy advocates).

Two-factor authentication won’t stop tracking, but it keeps a stolen password from handing over everything an account holds on you. Use an app like Aegis (open source) instead of SMS.

Alternative Platforms With Ethical Business Models

Social media doesn’t have to be a surveillance nightmare. These platforms put you first:

Mastodon is Twitter without the toxicity or tracking. It’s decentralized, meaning no single company controls it. Nobody’s selling your eyeballs to advertisers.

Signal has replaced WhatsApp for privacy-conscious people. End-to-end encryption by default, minimal metadata collection, and funded by donations, not your personal information.

Brave browser pays YOU for ads (if you choose to see them) instead of selling your data behind your back. It blocks trackers automatically and lets you directly support content creators.

Element (formerly Riot) offers encrypted chat that works across platforms without mining your conversations for ad targeting.

The best part? These alternatives are growing fast:

| Platform | User Growth (2023-2025) |
|---|---|
| Signal | 340% increase |
| Mastodon | 215% increase |
| Brave | 180% increase |

What these platforms have in common: transparent business models, open-source code (meaning independent experts can verify they’re not spying), and governance that isn’t solely profit-driven.

Many have subscription options that cost less than a coffee per month. Think about it – you’ll pay $5 for coffee but not for services that protect your digital life?

How to Recognize and Resist Manipulative Design

Those app features making you check your phone 100 times a day? They’re called “dark patterns” – deliberately manipulative design tricks. Here’s how to spot and beat them:

The infinite scroll trap keeps you endlessly swiping without natural stopping points. Combat it by setting a timer before you open any social app.

“Breaking news” and constant alerts create artificial urgency. Ask yourself: “Will this matter in a week?” before engaging.

The dopamine slot machine: Variable rewards (likes, comments) that appear unpredictably are straight from gambling psychology. That’s why you keep checking – your brain craves the next hit.

Those red notification dots are specifically designed in that color and position to trigger your brain. Go into settings and disable all notification badges.

Manipulative language like “104 people saw your post but didn’t like it” creates social anxiety. Recognize the guilt trip for what it is – a trick to keep you engaged.

“Free” is never free. When you’re not paying with money, you’re paying with attention and data. Always ask: what’s the real cost?

Resist by using greyscale mode on your phone (Settings > Accessibility) which makes colorful apps less appealing to your brain’s reward center.

Building Meaningful Connections Outside the Algorithm

Algorithms don’t understand real human connection – they optimize for engagement, not fulfillment. Here’s how to build relationships the algorithms can’t measure:

Schedule actual phone calls. Voice creates intimacy that text can’t match. Start with a 10-minute catchup call instead of a text thread.

Create a “slow media” group where friends share content worth savoring – books, long-form articles, documentary recommendations – not just quick dopamine hits.

Join communities based on creation, not consumption. Workshops, clubs, classes where you make something together forge stronger bonds than passive scrolling ever will.

The proximity principle still works: Regular, unplanned interactions with the same people build deeper connections than scattered digital “keeping in touch.” Find your third place – not home, not work, but somewhere else where you regularly show up.

Write physical letters. Yes, with a pen. The effort creates meaning that instant messaging can’t replicate.

Practice “full attention” conversations – no phones visible, not even face down on the table. Studies show merely having a phone in view reduces connection quality.

Host a “screen-free Saturday” gathering monthly. The initial awkwardness quickly transforms into the kind of conversations you’ve been missing.

Social media platforms have carefully engineered systems designed to capture our attention and monetize our personal data while potentially harming our mental health. From intentionally addictive interfaces and extensive data harvesting to algorithmic manipulation and social engineering, these technologies shape our perceptions and behaviors in profound ways that companies rarely acknowledge. The psychological toll—including anxiety, depression, and isolation—remains largely unaddressed by the very corporations profiting from our digital engagement.

You don’t have to remain a passive participant in this system. By understanding how these platforms operate, implementing digital boundaries, practicing mindful technology use, and occasionally disconnecting completely, you can regain control over your online experience. Remember that these platforms are designed to serve their shareholders first—not your wellbeing. Taking back your digital autonomy isn’t just possible; it’s essential for your mental health and agency in an increasingly algorithm-driven world.